Comparing Kubernetes managed services across Digital Ocean, Scaleway, OVHCloud and Linode


This article was updated:

15/06 to add an SLA & Support section (thanks T from the DevOps’ish Telegram group for the great idea); add the just released option to deploy Traefik v2 with Kapsule; explicitly mention that instance pricing is hourly

19/06 to update Kapsule’s latest available version (v1.18.4, less than 22 hours after public release!)

21/07 to mention Scaleway’s price hike and newer Kapsule versions

Introduction

Everybody knows about the “big three” cloud providers - Amazon Web Services, Google Cloud Platform and Microsoft Azure. However, there are a few other, lesser-known competitors, which provide fewer services, but have some advantages (most notably in terms of cost and complexity) and are perfectly viable choices, depending on size and requirements. In recent years, with the advent of Kubernetes, the feature gap is being bridged because with a managed k8s service and some related ones (load balancers, block and object storage, managed databases) one can go a long way before needing the full might of AWS’ 175 (at time of writing) services. And thanks to the ubiquity of Kubernetes, migrating to a new, more feature-complete cloud provider isn’t that complex (a lot less than if you have custom orchestration and cloud-specific deployment logic), if you do someday outgrow your current choice.

Especially when starting (be it in a hobby capacity, a side project, or a start-up), relatively simple configuration and pricing can be a huge advantage. Everybody could do with not needing schematics like this one by Corey Quinn:

In this article I’d like to compare how some of those smaller, more developer-oriented providers fare in terms of managed Kubernetes and associated services. I’ll also throw in a brief comparison to AWS or GCP from time to time to put things in perspective.

Note: When I say “managed Kubernetes service”, I mean a service where the master nodes (Kubernetes control plane) are hosted and managed by the provider. It’s not about Container-as-a-Service offerings like AWS Fargate, Google Cloud Run or Scaleway Serverless, where Kubernetes might be running behind the scenes, but you only deal with it via an abstraction layer (like Knative) at the container level.

Criteria

- Kubernetes features

- complexity

- tooling

- ecosystem

- cost

Competitors

I’ll compare Digital Ocean, Scaleway, OVHCloud and Linode, some of the most popular traditional VPS providers, which have also branched out into the managed Kubernetes space, keeping with their developer-oriented, low cost, low complexity model. Why only them? They were the only ones I could find within the first two pages of Google search that weren’t enterprise-only (IONOS) or too similar in model, complexity and pricing to AWS/GCP/Azure (IBM, Oracle). Civo was also pointed out to me on the DevOps’ish Telegram group, but they’re only in private beta.

Brief history and overview

Digital Ocean

Founded in 2011 as a VPS provider (“droplets” in DO parlance), their product range has been extended to add managed databases (PostgreSQL, MySQL and Redis), managed Kubernetes (main focus of this article), load balancers and object storage (Spaces). They have mostly a good reputation and are easy to use. Currently they have 8 locations around the world (New York and San Francisco in the USA, Amsterdam in the Netherlands, Singapore, London in the UK, Frankfurt in Germany, Toronto in Canada and Bangalore in India).

Scaleway

Formerly a sub-brand of Online.net (founded in 1999, part of the French Iliad group) which offered custom-made ARM servers, since last year it’s the main brand for their public cloud offerings, which include bare metal servers, traditional VPSes (x86), object storage, archival storage, managed Kubernetes (Kapsule, which I’ve explored before on my blog), serverless offerings (Functions and Containers as a service), IoT/message queue, managed databases (PostgreSQL and soon MySQL), load balancers and soon AI inference. Currently they have 2 locations (Paris, France and Amsterdam, Netherlands), with a third one in Poland coming sometime in 2020, and others planned for “Asia and Latin America” “by end-2020”.

OVHCloud

Founded in 1999 and formerly known as OVH, they started as a managed hosting and VPS provider, and have expanded into telecoms, managed OpenStack, VMware vSphere and Kubernetes, email hosting, managed databases (MySQL, MariaDB, PostgreSQL, Redis), load balancers, Anti-DDoS, and a Data and Analytics platform. It’s by far the biggest of the bunch, in terms of services, clients and locations (Roubaix, Strasbourg, Gravelines, Paris in France, Frankfurt in Germany, Warsaw in Poland, London in the UK, Beauharnois in Canada, Vint Hill in the USA, Singapore, Sydney in Australia).

Linode

Created in 2003 as a VPS provider (“Linodes” in Linode parlance), Linode have grown to also provide object storage, load balancers and Kubernetes.

Note: not all of the locations listed for the different cloud providers have their respective managed Kubernetes service

Getting started

Creating an account

Creating an account is basically the same thing across all four providers - sign up, confirm your email, set up a payment method, done.

Digital Ocean and OVHCloud require you to create a “project” to organise your resources. In DO, that’s just an organisational unit to group certain types of resources (but not Kubernetes clusters, for instance), and makes no difference in terms of functionality or billing. In OVHCloud, a “public cloud project” is the level on which you interact with billing, quotas and IAM (Identity and Access Management, users and their rights).

Sharing access

In Digital Ocean you can create teams and add users with different access levels (which boil down to owner with full access, billing access, and access to DO resources); on OVHCloud the same can be done on the “public cloud project” level (with similar roles for billing and developers); Linode allows adding users with detailed ACLs (create a new linode, power on/off, create images, delete, etc.). Scaleway used to be the only ones without anything similar, but they recently added Organizations in beta, which allow sharing access at different levels (similar to DO) with different team members.

Navigating the web UI

All of the web interfaces are pretty similar - clean, with a lot of information provided at a glance and many actions readily available, with the exception of OVHCloud (I’ll get into the disaster it is in a bit).

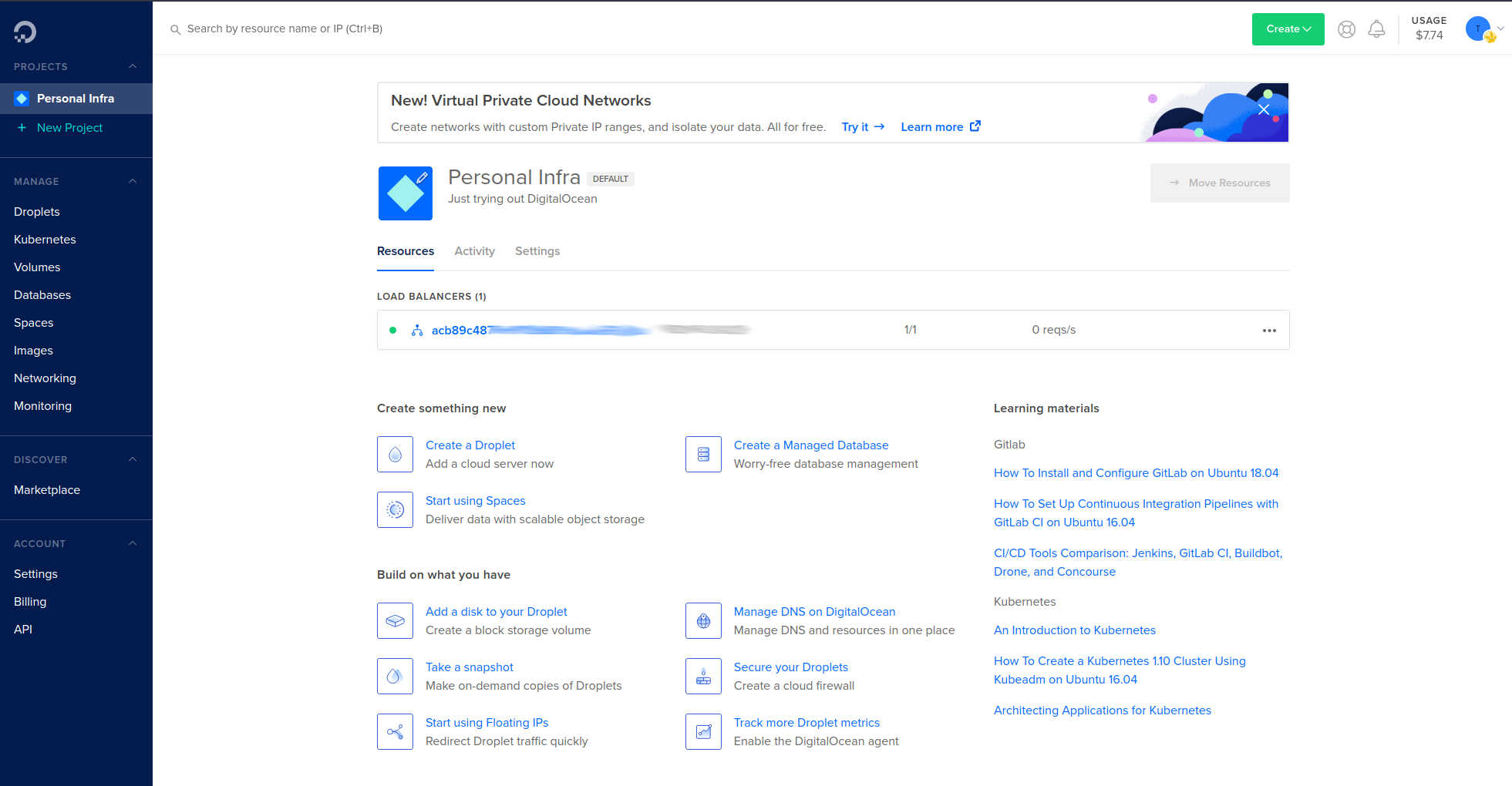

Digital Ocean

Simple, elegant, everything is there at a glance. You can see billing usage, create new resources, check out documentation, switch projects and accounts/teams from the dashboard, and there’s a menu with all services on the left. Each resource creation presents an estimate of the monthly bill for it, which is pretty nice to visualise. You can search by name or IP. On each droplet’s page there is integrated monitoring with basic CPU, memory, load, disk, disk I/O, bandwidth graphs and basic alerting rules; backup and restore options (including a virtual console or boot from a recovery ISO). Overall, great UX.

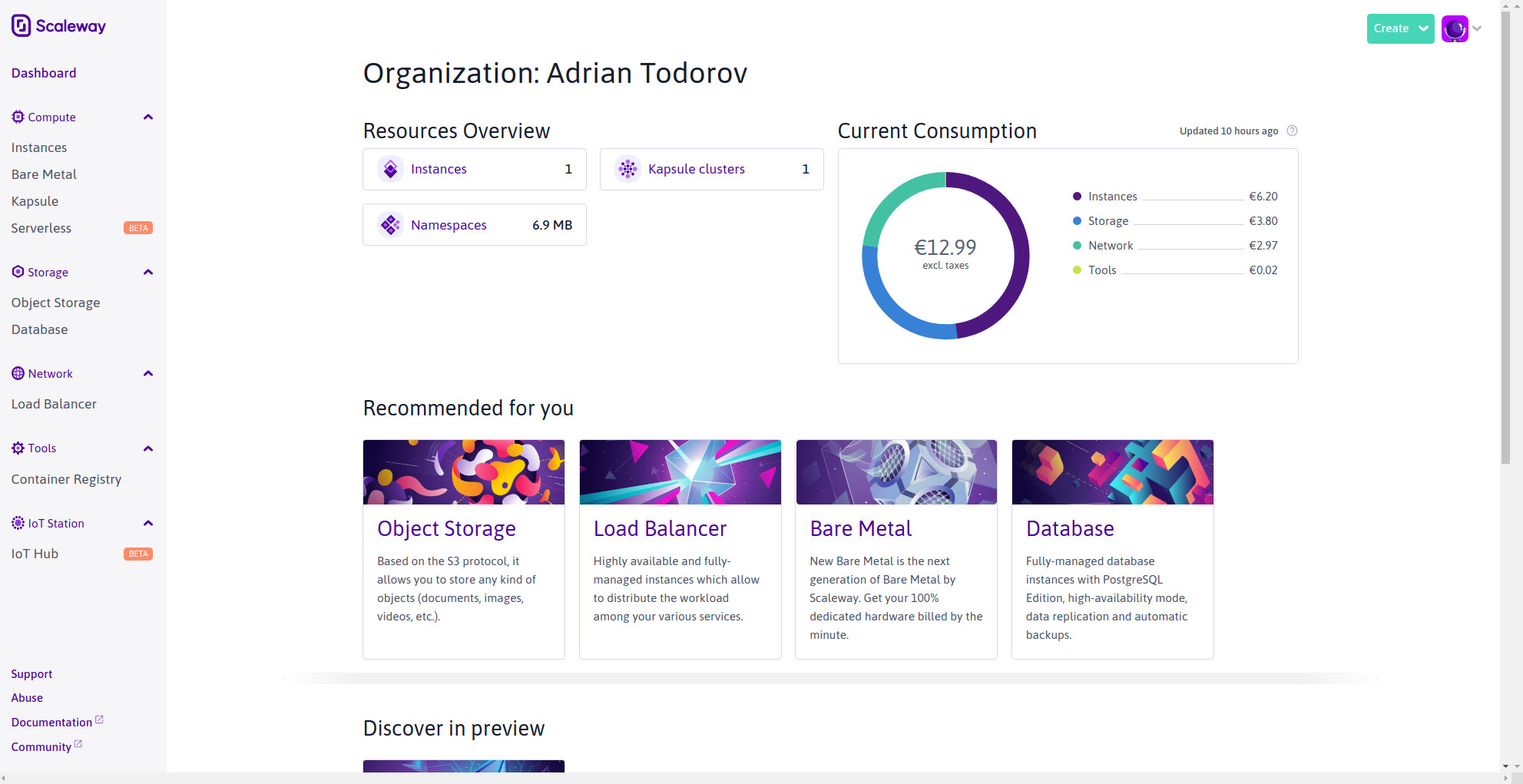

Scaleway

Pretty similar to Digital Ocean - simple, clean, everything is easily accessible. There’s a “Create” button up top, a detailed resources and billing overview, links to documentation, and a menu on the side with all services. You can attach a virtual console to the instances (requires a user with password auth enabled though), use cloud-init for the initial bootstrap, “protect” them to prevent accidental halt/deletion, and every creation previews an estimated monthly and hourly price. Each resource page (compute, object storage, etc.) has direct links to the associated documentation, which is handy.

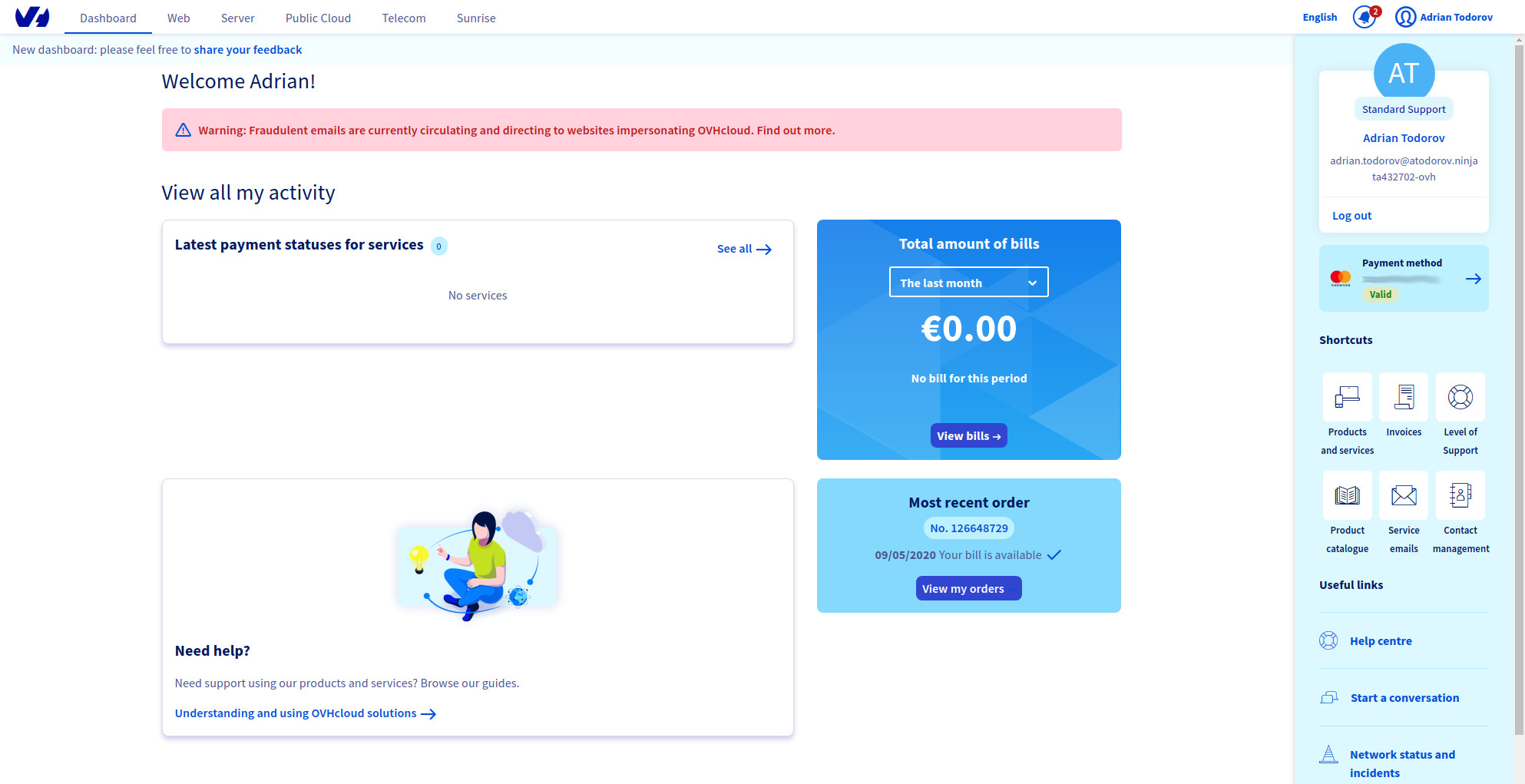

OVHCloud

Completely different thing. Their “console” (OVHCloud Control Panel or Manager), is actually a mix of a bunch of different consoles for different branches of products, and is painfully slow (each console takes ~10s to load when first accessing it) and chaotic. It absolutely shows they’re a bigger company with various product ranges that evolved weirdly over the years, yet they could probably organise them better. AWS have everything from basic compute through satellite communications to quantum computers and the AWS Console is much clearer. Things aren’t helped by the confusing naming schemes and product lines - Web, Server, Public cloud, Telecom, Sunrise are product lines/sub-consoles, with unclear, sometimes overlapping product names like Cloud Web and Hosting under Web; Private Cloud under Cloud and Public Cloud under Public cloud. Some services are referenced by different names throughout documentation, blog posts and consoles. It’s a complete mess and it requires some clicking around (which is slow) or reading documentation just to understand how to get to the parts that interest you, which only brings up more questions:

Cloud, Cloud Web, Private Cloud and Public Cloud are listed as separate products/product lines. If OVH Load balancer is under Cloud and Managed Kubernetes is under Public Cloud, does that mean I can’t use load balancers with their k8s service?

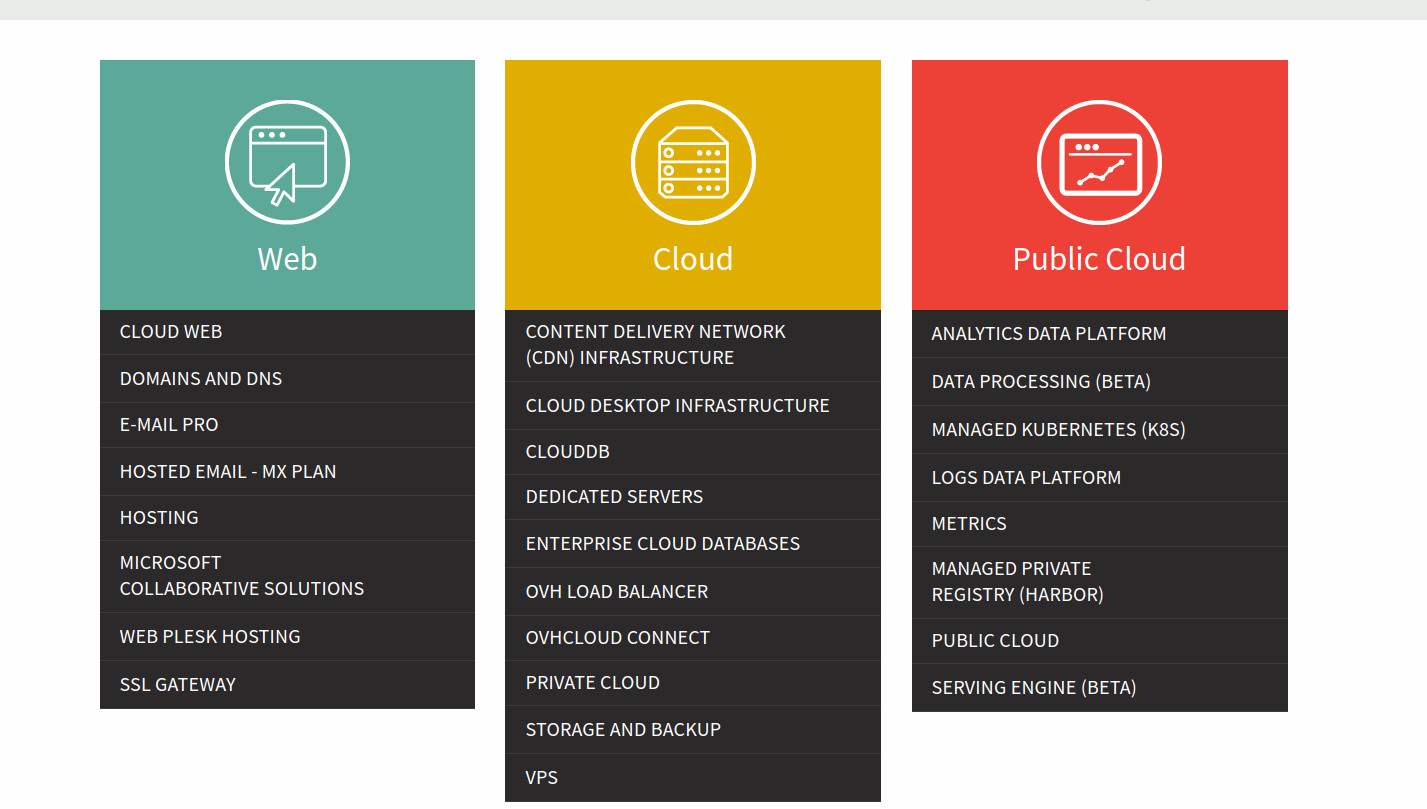

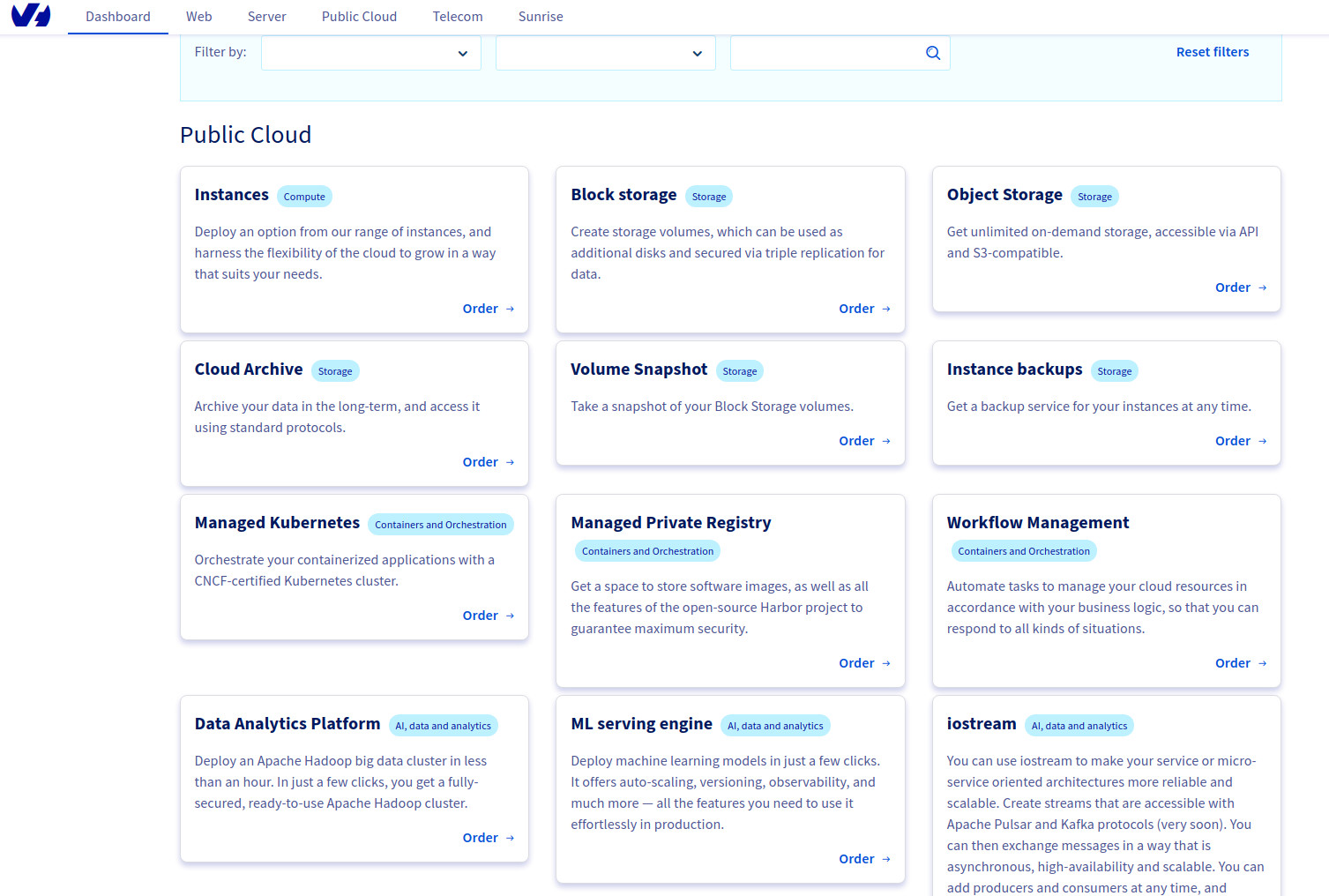

Thankfully they provide a Product catalogue that at least lists all services with descriptions and categories in a somewhat clear fashion:

It would appear that Private cloud/Hosted Private Cloud/SDDC is a VMware-centred offering, and Public Cloud is OpenStack-based (which is why you get a Horizon interface on top of the OVHCloud one), their Kubernetes service being based on the latter.

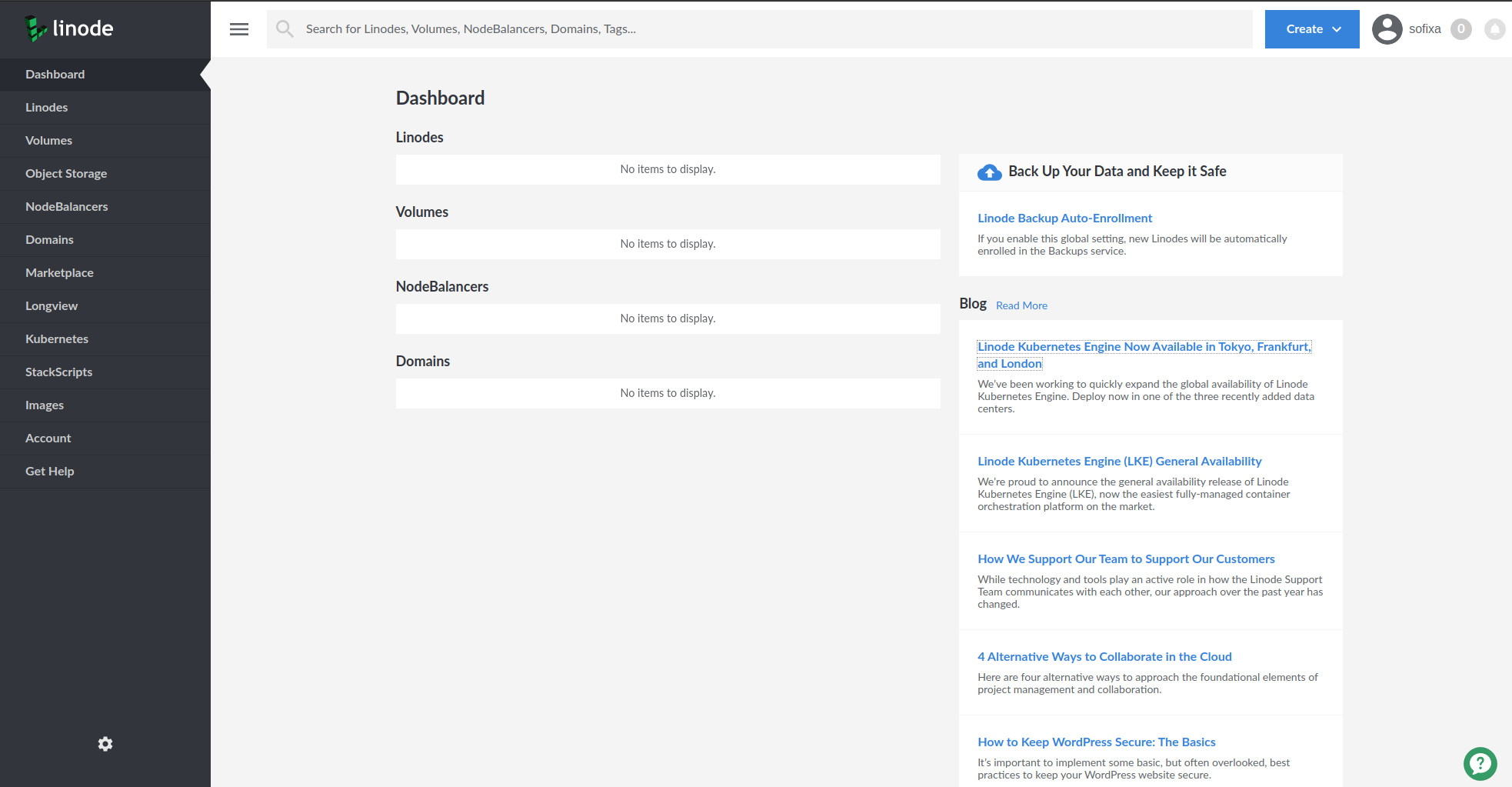

Linode

Back to a clean and nice interface. You get a quick glance of existing resources, an easy way to create new ones via a “Create” button, a powerful search, a sidebar menu with all services and settings, and cost estimations on each creation. Similar to DO, each instance’s interface also has some basic graphs.

CLI & API

Digital Ocean

doctl, a Golang-based CLI (static binaries for many platforms, distribution via homebrew and snapd), somewhat resembles kubectl in organisation (doctl subject action, e.g. doctl kubernetes cluster list), and can do pretty much everything Digital Ocean related - listing, creating, updating various resources and related actions (like getting a k8s cluster’s kubeconfig, and even, for snapd installs, connecting/integrating it with kubectl and ssh), including billing-related ones (getting invoices).

Their API covers pretty much everything and has libraries in multiple languages. There are great docs and guides.

Scaleway

scw, Golang-based (static binaries for many platforms, distribution via homebrew and Chocolatey), is inspired by the docker CLI, only manages compute instances and is currently under heavy redevelopment (v1.2 -> v2, in beta, and also following the kubectl mantras) to handle the new Scaleway services (including Kapsule).

Their API covers all services and is well documented.

OVHCloud

OVH have no actively maintained CLI, ovh-cli being archived since ~2018.

There’s an API, and associated Python and PHP SDKs which cover some scenarios, but, weirdly, the Kubernetes methods are deprecated. Apparently they were moved under the /cloud/ section. Docs are much more basic (OpenAPI-style descriptions of the parameters, with little extra explanation, context or examples) than DO’s or Scaleway’s.

Linode

linode-cli is a flexible Python-based CLI (distribution and installation via PyPI) that allows creating/updating/deleting/listing pretty much any Linode resource (Linodes, domains, managed Kubernetes, etc.).

Their API is fully featured, and they have libraries and tools for various languages and tasks.

Conclusion

All 4 have complete APIs, but Digital Ocean, Scaleway and Linode also have great tooling (libraries, SDKs, CLIs).

Terraform providers

All 4 have terraform providers with varying levels of maturity. Digital Ocean, Scaleway and Linode cover all of their services (including Kubernetes), while OVHCloud’s one doesn’t (somewhat understandable considering their complex web of services and products).

Kubernetes

Digital Ocean Kubernetes Service

Digital Ocean Kubernetes Service is currently available in 10 datacenters across 8 locations covering most of the world (North America, Europe and Asia). It supports recent-ish versions of Kubernetes (v1.17, the latest being v1.18), and new clusters can be created via the web interface, API, CLI and terraform. Node pools can be composed of Standard, CPU-Optimised, Memory-Optimised or General Purpose droplets, and you can mix and match them as you want to fit different workloads. Cluster-autoscaler is available for dynamically sized node pools, but only when creating via terraform or doctl; via the web UI a node pool can only be modified to be autoscaling, not created as such. Clusters come with cilium as the CNI plugin, a Kubernetes dashboard (with automatic SSO when coming from the DO web interface), automatic patches in maintenance windows (only patch versions, e.g. v1.17.0 to v1.17.1, but not upgrades like v1.17 to v1.18, which have to be launched manually), and basic cluster and node-level metrics with some nice graphs, thanks to their do-agent which runs on all nodes and can even be used for basic alerting (only simple rules: metric X is above or below fixed value Y for duration Z on a droplet or on droplets by tag).
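To make the shape of those simple alerting rules concrete (metric X above or below a fixed value Y for duration Z), here is a minimal sketch of how such a rule could be evaluated. It is purely illustrative and is not how do-agent or Digital Ocean's alerting is implemented: the `ThresholdRule` class is hypothetical, and I'm assuming the duration is expressed as a number of consecutive, evenly spaced samples.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ThresholdRule:
    """Hypothetical rule: metric above/below a fixed threshold for N consecutive samples."""
    threshold: float          # fixed value Y
    duration_samples: int     # duration Z, as a count of consecutive samples (assumption)
    above: bool = True        # True: fire when above Y, False: fire when below Y

    def __post_init__(self) -> None:
        if self.duration_samples < 1:
            raise ValueError("duration_samples must be at least 1")

    def fires(self, samples: Sequence[float]) -> bool:
        """Return True if the most recent `duration_samples` values all breach the threshold."""
        if len(samples) < self.duration_samples:
            return False  # not enough history to cover the whole duration
        window = samples[-self.duration_samples:]
        if self.above:
            return all(value > self.threshold for value in window)
        return all(value < self.threshold for value in window)


if __name__ == "__main__":
    cpu_rule = ThresholdRule(threshold=80.0, duration_samples=3)
    print(cpu_rule.fires([50.0, 85.0, 90.0, 95.0]))  # last three samples are all above 80
    print(cpu_rule.fires([85.0, 90.0, 70.0]))        # the latest sample dropped below 80
```

Anything more elaborate (rates of change, combining several metrics, and so on) does not fit this rule shape.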

DO also provide a basic managed Container Registry, which is however in early availability, and pricing is specific and fixed (you need to subscribe to a Digital Ocean Spaces (their object storage) plan at $5/month for 250GB of storage and 1TB outbound data transfer), but access is easy (you need a DO API token, which can be read-only or read/write) from within your CLI or Kubernetes cluster.

Digital Ocean’s cluster-autoscaler, Cloud Controller Manager (which allows the creation of Load balancers from within the cluster) and CSI plugin are all open source and available on GitHub to consult and contribute to. Documentation and how-to tutorials are top notch, and explain important bits like creating and configuring load balancers, adding block storage, etc.

Operations (cluster creation, upgrades) are pretty fast and usually complete within a few minutes.

All in all, you get a pretty complete and feature rich solution - the only thing I’d say is “missing” is log management, something one might take for granted coming from AWS/GCP/Azure.

Scaleway Kapsule

Scaleway Kapsule is currently only available in the Paris region, but stays very close to Kubernetes releases (v1.18.6, latest release, was available less than a day after being on GitHub), which is really rare, I haven’t found another provider so up to date (for reference, the closest, OVHCloud, are on v1.18.1, GCP are in preview on v1.17.6, and AWS are still on v1.16.8). Clusters can be created via the web UI, API and terraform, all with the same options, which are pretty rich:

- CNI (Container Network Interface) can be any one of cilium (default), calico, weave or flannel

- ingress can be any of none (default), nginx or traefik (versions 1.7 or 2.2), which can also be modified in-place after creation

- autoscaling and autohealing of nodes

- nodes come in a variety of sizes from 3 different product lines, DEV (2-4 vCPUs and 2-12GB RAM), GP1 (General Purpose, 4-48 vCPUs and 16-256GB RAM) and RENDER (which include dedicated NVIDIA Tesla P100 16GB GPUs alongside 10 cores (half of an Intel Xeon Gold 6148))

There’s a default node pool and you can add extra ones later on, each with its own autoscaling configuration. Besides that, you can opt in to Kapsule deploying a Kubernetes dashboard for you (in a dedicated namespace, following best practices). Every cluster also comes with a wildcard DNS you can use to test things, but there’s no monitoring/metrics/alerting included (besides metrics-server running on clusters by default), only docs about how you can add them yourself. They do, however, have a basic managed container registry available, where storage is cheap (€0.025/GB/month) and networking is free within the same region (€0.03/GB/month outside), but access to a private instance of it requires a Scaleway API Token, each of which has full read/write access to the whole of your Scaleway account, so it’s not great, security-wise.

Scaleway’s Cloud Controller Manager and CSI plugin are also open source and available on GitHub, and their documentation and tutorials are also pretty great and detailed, and include important things like creating load balancers, using Loki for logs management and plenty of others. Cluster upgrades are available via the API (and terraform).

Operations (creation, upgrades, scale ups and downs) are pretty fast.

Similar to Digital Ocean, you get a pretty complete and feature rich managed Kubernetes, with the main things “missing” being integrated monitoring/metrics/alerting and log management.

OVHCloud Managed Kubernetes Service

OVHCloud’s Kubernetes service is only available in two datacenters, in Canada and France (more to come in 2020), and, even if they keep up with Kubernetes versions faster than DO (v1.18.1 is latest available, and they promise they’ll have new versions available in the quarter after release), it works a bit differently, and not necessarily in a good way. Organisationally, Kubernetes clusters and nodes are under their Public Cloud services, and billing is under the respective Public Cloud project. You have to first create a cluster (control plane hosted by OVHCloud), and only after it’s created you can add individual nodes, each of which is rather slow (at least compared to DO and Scaleway) - ~5 minutes for the cluster creation (and apparently it’s a feature because they even notify you by email when your cluster has been created) and 5-10 minutes for each node. Node pools and autoscaling are on their official roadmap and are available as experimental features in their API. On that roadmap are also bare metal nodes, which can be great for performance. By default clusters are on the “maximum security” security policy, which means they get all patch updates automatically, and you can do upgrades manually (via the web interface or the API).

Nodes come in different flavors (sizes) and flavor families (CPU, RAM, IOPS (which come with dedicated NVMe drives)), but not all are available depending on location (e.g. at the time of writing, in Gravelines, France, anything with more than 8 vCPUs from the CPU-optimised family or more than 30GB RAM from the memory-optimised family wasn’t available, and there were no instances at all, of any type, from the IOPS-optimised family). Canal (calico for policy and flannel for networking) is used as the CNI plugin.

OVHCloud’s Managed Container Registry is far more advanced than Digital Ocean’s or Scaleway’s, since it’s based on CNCF’s Harbor project, and it includes detailed RBAC (Role-Based Access Control), vulnerability scans of images, and Helm chart hosting alongside OCI-compatible images, but pricing is a bit specific and inflexible - there are different tiers and you pay for a combination of storage (200GB, 600GB or 2TB) and number of concurrent connections (15, 45, 90), while networking is free. There’s no monitoring/metrics/alerting or logging included by default, but they have a (somewhat old, but probably still usable) doc on how to send metrics from your clusters to their OVH Observability/Metrics platform (based on Warp10), and another one about sending logs to their Logs Data Platform (based on Graylog). Their documentation is pretty decent, even if there are some losses in translation, with French idioms/phrases slipping into the English versions.

Operations feel slow - on top of the aforementioned 5-10 minutes for adding a node, a load balancer takes around the same time to create, and even the Kubernetes API sometimes takes a bit longer to respond than usual. OVHCloud run Kubernetes on top of OpenStack and probably use the official cloud-provider-openstack and the associated cloud controller manager and Cinder CSI plugin.

Overall, it’s hit and miss. There are some great things about OVHCloud’s Kubernetes service, mostly in related services (Harbor is a great registry, there are fully featured metrics and logs platforms), but the Kubernetes service itself is sorely missing even basic features like node pools, the pricing on those related services is on static plans (instead of pay per use), and in general everything about it feels slow and clunky. At least their roadmap is public and they intend to address some of the major shortcomings, and hopefully work on the speed and UX.

Linode Kubernetes Engine

Linode’s LKE is available in 10 locations across North America, Europe and Asia Pacific (and they even provide a handy speed test page to help you pick the closest region), and, similar to Digital Ocean, has a relatively recent version of Kubernetes (v1.17.x, the latest being v1.18.x) available. Clusters can be created via terraform, web interface, CLI or API, come with calico as the CNI and their node pools can be composed of nodes from the Standard (1-32 vCPUs, 2-192GB RAM), Dedicated (2-48 dedicated virtual cores, 4-96GB RAM) or High Memory (1-16 CPU, 24-300GB RAM) ranges; sadly their GPU instances are not available. There are basic graphs per instance (CPU, IPv4 and IPv6 network traffic, and Disk I/O) coming from their Longview agent, which is deployed on all Kubernetes nodes and can also be used for basic alerting (enabled by default with sane thresholds), as well as decent audit logs.

There’s no cluster (node) autoscaling, no cluster upgrades (v1.16 to v1.17), and no node patches/upgrades, only automatic control plane patches by Linode. And even though they don’t provide a managed Docker registry, they have a how-to guide on how to set up one with their Object Storage and the Docker registry Helm chart, and, of course, if you’re going to run one yourself, you can always install Harbor for the extra features.

Their documentation is pretty detailed and of good quality, and so are their open source tools (CLI, SDK, API, terraform provider, cloud controller manager, CSI plugin).

Operations are pretty fast (and you can even quantify it thanks to their logs, which show it took ~60s for an instance to become available).

Overall, LKE is decent but lacking some very important features (cluster upgrades and autoscaling).

Features comparison

Legend

- feature/service present and in GA

- feature/service present, in GA, and much more advanced than competitors'

- feature/service present in beta

- feature/service not present

- feature/service on roadmap

- feature available, but it costs extra or is in a separate service billed separately

| Feature | Digital Ocean | Scaleway | OVHCloud | Linode | GCP |

|---|---|---|---|---|---|

| Up to date Kubernetes | v1.17 | v1.18 | v1.18 | v1.17 | v1.17 in preview |

| Free control plane | | | | | only for the first zonal cluster |

| Kubernetes dashboard | | optional | | | replaced by a GKE dashboard |

| Ingress Controller | | optional, Nginx/Traefik v1 or v2 | | | optional, GKE Ingress |

| Bare metal Instances | | | | | |

| GPU Instances | | | | | |

| Cluster Autoscaler | | | | | |

| Cluster Patches (v1.17.x) | | | | | |

| Automatic Cluster Patches | | | | | |

| Cluster Upgrades (v1.xx) | | | | | |

| Metrics | | | | | |

| Logs | | | | | |

| API | | | | | |

| Terraform provider | | | | | |

| IAM/Team management | | | | | |

| CNI plugin | cilium | cilium, calico, weave or flannel | canal | calico | GKE custom or calico |

| VPC (Private network) | | | | | |

| Unlimited network transfer | | | except for SYD and SGP* | | |

| IPv6 dualstack | | | | | |

| Multi-zone/datacenter clusters | | | | | |

* “Outbound public traffic is also free and unlimited, with the exception of the Singapore (SGP) and Sydney (SYD) regions, where 1,024 GB/month per project, per datacentre is available. Each additional GB will be charged for” Source

| Additional Services | Digital Ocean | Scaleway | OVHCloud | Linode | GCP |

|---|---|---|---|---|---|

| Block storage | | | | | |

| Object Storage | Spaces | Object Storage | Object storage (based on Swift) | | Google Cloud Storage |

| Archival Storage | | C14 | Cloud Archive | | Cloud Storage for data archiving |

| Load Balancer | | | | | |

| Managed Container Registry | basic | basic | Harbor | | GCR |

| Managed Databases | PgSQL, MySQL, Redis | PgSQL, MySQL* | PgSQL, MySQL, MariaDB, Redis | | a lot |

| Secrets Management | | | | | Secret Manager |

| Managed Message broker | | IoT Hub (MQTT) | ioStream | | Pub/Sub |

| Serverless (Functions) | | Knative | | | Cloud Functions |

| Serverless (Containers) | | Knative | | | Cloud Run |

| AI | | AI Inference | Data & Analytics | | Cloud AI |

| Data Warehouse | | | Data Analytics Platform (based on Hadoop) | | BigQuery |

| Data Processing | | | Data Processing (based on Spark) | | Dataprep/Dataproc/Dataflow |

* Only PgSQL is currently publicly available as a managed database by Scaleway, MySQL is in private beta

Pricing

All four providers have relatively simple pricing when it comes to their managed Kubernetes services - the control plane is free, you only pay for compute, memory, storage and networking (load balancers, extra public IPs) you consume, which are billed with hourly granularity (practical for autoscaling). There’s however a caveat with some of them - Digital Ocean and Linode have an account-level monthly public network transfer cap based on amount and size of instances you run (starting at 1TB/droplet for DO and 2TB/linode for Linode, per full month), and anything on top is billed ($0.01/GB). Scaleway and OVHCloud have free network transfer, with the latter having an exception for instances located in their Singapore and Sydney datacenters, which are capped at 1024 GB/project/month (but they don’t have the Kubernetes service anyway).

Compute

Back in April Scaleway posted this price comparison between them and competitors (I checked the numbers and they aren’t lying):

(Linode are missing, but their pricing is identical to Digital Ocean’s - and I mean that literally, the only difference in Linode Standard Plans vs Standard Droplets is the network transfer each instance adds to your cap - compute, memory, even local storage space are the same and with the same price)

Note: Pricing is in USD regardless of location for Digital Ocean and Linode, in EUR for Scaleway, and OVHCloud offer different currencies depending on your location (EUR in Eurozone countries, PLN in Poland, CAD in Canada, etc.). The exchange rate at the time of writing is 1 USD = 0.89 EUR | 1 EUR = 1.13 USD, but that doesn’t change the scale too drastically because the difference between Scaleway and OVHCloud, Digital Ocean/Linode, and then AWS/GCP/Azure is too big. In further comparisons, I’ll use prices in their original currency, as billed, and in parentheses convert the values to euros/US dollars using today’s exchange rate.
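For reference, the conversions in parentheses throughout the pricing tables boil down to a single multiplication. A minimal sketch, using the two rounded rates quoted above (the function names are mine, and because the rates are rounded, some converted values can differ slightly from the ones in the tables):

```python
# Exchange rates quoted in this article at the time of writing (rounded).
USD_TO_EUR = 0.89
EUR_TO_USD = 1.13


def usd_to_eur(amount_usd: float) -> float:
    """Convert a price billed in US dollars to euros."""
    return amount_usd * USD_TO_EUR


def eur_to_usd(amount_eur: float) -> float:
    """Convert a price billed in euros to US dollars."""
    return amount_eur * EUR_TO_USD


if __name__ == "__main__":
    # Block storage: Digital Ocean/Linode bill in USD, Scaleway in EUR.
    print(f"$0.10/GB/month is about EUR {usd_to_eur(0.10):.3f}")
    print(f"EUR 0.08/GB/month is about ${eur_to_usd(0.08):.2f}")
    # Load balancer billed at $10/month.
    print(f"$10/month is about EUR {usd_to_eur(10):.2f}")
```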

And it’s a great comparison, which shows that, when it comes to General Purpose instances, they’re pretty much the same as OVHCloud (they were slightly cheaper before their price hike starting 01/08/2020), much cheaper than DO/Linode, and even more so than AWS/GCP/Azure. However, they provide little flexibility in terms of CPU/RAM combinations, so if one requires a different ratio, they might not be the best option.

Storage

Block Storage

| Provider | Storage class | Price per GB/month |

|---|---|---|

| Digital Ocean | SSD, 800-5000 IOPS, 20-200 MB/sec depending on block size | $0.10 (€0.089) |

| Scaleway | SSD, 5000 IOPS | €0.08 ($0.09) |

| Scaleway | SSD+, 10000 IOPS (coming mid-2020) | €0.12 ($0.14) |

| OVHCloud | Classic, 250 IOPS guaranteed | €0.04 / 0.06 CA$ ($0.045 ) |

| OVHCloud | High speed, up to 3000 IOPS | €0.08 / 0.138 CA$ ($0.09) |

| Linode | up to 5000 IOPS, 150MB/s | $0.10 (€0.089) |

Scaleway seem to have the best price/performance ratio, Linode and Digital Ocean are again very similar, and OVHCloud are in a category of their own with very cheap but very slow storage, and a “high speed” class which is slower than the competition but more acceptable.
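One rough way to put a number on that price/performance comparison is to divide the price per GB by the advertised peak IOPS. The sketch below does that with the figures from the table above; it is only a crude heuristic of my own (peak IOPS aren't guaranteed, except for OVHCloud's Classic tier, and throughput is ignored), it converts USD prices with the 0.89 rate used in this article, and it leaves out Scaleway's SSD+ class since it isn't available yet.

```python
USD_TO_EUR = 0.89  # rate used in this article

# (provider, storage class, price per GB/month in EUR, advertised peak IOPS)
volumes = [
    ("Digital Ocean", "SSD", 0.10 * USD_TO_EUR, 5000),
    ("Scaleway", "SSD", 0.08, 5000),
    ("OVHCloud", "Classic", 0.04, 250),
    ("OVHCloud", "High speed", 0.08, 3000),
    ("Linode", "Block storage", 0.10 * USD_TO_EUR, 5000),
]


def eur_per_1000_iops(price_eur_per_gb: float, iops: int) -> float:
    """Price per GB/month normalised to 1000 advertised IOPS (crude heuristic)."""
    return price_eur_per_gb / iops * 1000


# Cheapest per unit of advertised performance first.
ranked = sorted(volumes, key=lambda v: eur_per_1000_iops(v[2], v[3]))

if __name__ == "__main__":
    for provider, storage_class, price, iops in ranked:
        score = eur_per_1000_iops(price, iops)
        print(f"{provider:14} {storage_class:14} {score:.4f} EUR/GB/month per 1000 IOPS")
```

With these inputs Scaleway's SSD comes out first and OVHCloud's Classic tier last, which matches the impression from the table.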

Object storage

| Provider | Storage included | Additional storage cost GB/month | Outbound Transfer included | Additional Outbound Transfer GB/month | Basic price per month |

|---|---|---|---|---|---|

| Digital Ocean | 250GB | $0.02 (€0.018) | 1TB | $0.01 (€0.0089) | $5 (€4.45) |

| Scaleway | 75GB | €0.01 ($0.011) | 75GB | €0.01 ($0.011) | 0 |

| OVHCloud | 0 | €0.01 / 0.015 CA$ ($0.011) | 0 | €0.01 / 0.015 CA$ ($0.011) | 0 |

| Linode | 250GB | $0.02 (€0.018) | 1TB | $0.01 (€0.0089) | $5 (€4.45) |

Once again, Scaleway are cheaper than everyone else (to be fair, they have the same prices as OVHCloud, but they have a decent free tier of 75GB of storage and 75GB transfer/month to start), and Digital Ocean and Linode have identical pricing.
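To see how the free tiers and base prices in the table above play out for a given workload, here is a small sketch that estimates the monthly object storage bill. The `ObjectStoragePlan` class is a hypothetical helper of mine, the figures come straight from the table, and I'm assuming 1TB = 1000GB and ignoring per-request fees or minimum billing rules that a provider may apply. Costs are returned in each provider's billing currency.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectStoragePlan:
    """Hypothetical model of one row of the object storage pricing table."""
    currency: str
    base_price: float              # flat monthly fee
    included_storage_gb: float
    included_transfer_gb: float
    extra_storage_per_gb: float
    extra_transfer_per_gb: float

    def monthly_cost(self, storage_gb: float, transfer_gb: float) -> float:
        """Base price plus whatever exceeds the included storage and transfer."""
        extra_storage = max(0.0, storage_gb - self.included_storage_gb)
        extra_transfer = max(0.0, transfer_gb - self.included_transfer_gb)
        return (self.base_price
                + extra_storage * self.extra_storage_per_gb
                + extra_transfer * self.extra_transfer_per_gb)


PLANS = {
    # Digital Ocean and Linode have identical pricing (1TB assumed to be 1000GB).
    "Digital Ocean": ObjectStoragePlan("USD", 5.0, 250, 1000, 0.02, 0.01),
    "Linode": ObjectStoragePlan("USD", 5.0, 250, 1000, 0.02, 0.01),
    "Scaleway": ObjectStoragePlan("EUR", 0.0, 75, 75, 0.01, 0.01),
    "OVHCloud": ObjectStoragePlan("EUR", 0.0, 0, 0, 0.01, 0.01),
}

if __name__ == "__main__":
    # Example workload: 500GB stored, 500GB of outbound transfer per month.
    for name, plan in PLANS.items():
        cost = plan.monthly_cost(storage_gb=500, transfer_gb=500)
        print(f"{name:14} {cost:6.2f} {plan.currency}")
```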

Networking

Load balancers

| Provider | Load balancer class | Price per month |

|---|---|---|

| Digital Ocean | Load balancer | $10 (€8.90) |

| Scaleway | LB-GP-S, 200Mbps | €8.99 ($10.11) |

| Scaleway | LB-GP-M, 500Mbps | €19.99 ($22.47) |

| Scaleway | LB-GP-L, 1Gbps, Multicloud | €49.99 ($56.20) |

| OVHCloud | Network Load Balancer | €10 / 16 CA$ ($11.24) |

| Linode | Nodebalancer | $10 (€8.90) |

Digital Ocean and Linode, with identical pricing once more, come out slightly cheaper than OVHCloud and Scaleway, the latter being the only ones with different tiers and guaranteed throughput.

Network transfer

As I already mentioned, OVHCloud and Scaleway have unlimited (in volume) network transfer, while Linode and Digital Ocean apply an account-wide cap based on number and type of instances (starting from 1TB/droplet for DO and 2TB/linode for Linode), and anything extra costs $0.01/GB.
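A quick sketch of how that pooled cap works out in practice. It is a simplification under stated assumptions: every instance contributes only the starting allowance (1TB for a droplet, 2TB for a linode, while bigger instances add more), all instances run for the full month, and 1TB = 1000GB. The function name is mine.

```python
OVERAGE_USD_PER_GB = 0.01  # price of each GB above the account-wide cap


def transfer_overage_cost(instances: int,
                          allowance_gb_per_instance: float,
                          used_gb: float) -> float:
    """Monthly overage bill in USD for an account-wide pooled transfer cap."""
    pooled_cap_gb = instances * allowance_gb_per_instance
    overage_gb = max(0.0, used_gb - pooled_cap_gb)
    return overage_gb * OVERAGE_USD_PER_GB


if __name__ == "__main__":
    # Three smallest nodes pushing 5TB of outbound traffic in a month.
    do_cost = transfer_overage_cost(instances=3, allowance_gb_per_instance=1000, used_gb=5000)
    linode_cost = transfer_overage_cost(instances=3, allowance_gb_per_instance=2000, used_gb=5000)
    print(f"Digital Ocean: ${do_cost:.2f} overage")
    print(f"Linode:        ${linode_cost:.2f} overage")
```

In this example the Digital Ocean account exceeds its 3TB pool by 2TB, while the same traffic stays within Linode's 6TB pool.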

SLA & Support

| Provider | Support tier | SLA | Response time | Price per month |

|---|---|---|---|---|

| Digital Ocean | Basic | 99.99% droplets and block storage | No guarantee | 0 |

| Scaleway | Basic | 99.9% network, 99.99% instances | No guarantee | 0 |

| Scaleway | Developer | 99.9% network, 99.99% instances | <12 hours | €2.99 |

| Scaleway | Business | 99.9% network, 99.99% instances | <2 hours | “Starting at €49” |

| Scaleway | Enterprise | 99.9% network, 99.99% instances | <30 minutes | “Starting at €499” |

| OVHcloud | Standard | 99.999% instances | No guarantee, ~8 hours (working hours) | 0 |

| OVHcloud | Premium | 99.999% instances | No guarantee, ~2 hours (working hours) | €50 / 72 CA$, 12 months minimum |

| OVHcloud | Business | 99.999% instances | 30mn - 8 hours depending on severity (24/7) | “starting at” €250 / 360 CA$ |

| OVHcloud | Enterprise | 99.999% instances | 15 minutes for P1 (24/7) | “starting at” €5000 / “contact us” |

| Linode | Basic | 99.99% for all services | No guarantee | 0 |

| AWS | Basic | 99.99% for EC2 | No guarantee | 0 |

| GCP | Basic | 99.5% for a single GCE instance | No guarantee | 0 |

You can calculate how much is 99.9% with uptime.is

OVHCloud and Scaleway are the only ones to offer business support, and the former have the best SLA at 99.999%, compared to everyone else’s 99.99%. That’s better than AWS EC2 at 99.99% or GCP at 99.5%, which, to be fair, make it easier to run multi-zonal/regional, so an individual instance’s availability is of lesser importance.
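If you’d rather compute it yourself than use a website, the allowed downtime behind an SLA percentage is simple arithmetic. A minimal sketch, assuming a 30-day month (how each provider actually measures and credits downtime is defined in their respective SLA terms, which this doesn’t model):

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float = 30.0) -> float:
    """Maximum downtime, in minutes, that still meets the SLA over the given period."""
    period_minutes = period_days * 24 * 60
    return period_minutes * (1 - sla_percent / 100)


if __name__ == "__main__":
    for sla in (99.5, 99.9, 99.99, 99.999):
        minutes = allowed_downtime_minutes(sla)
        print(f"{sla}% over 30 days: {minutes:.2f} minutes ({minutes * 60:.0f} seconds)")
```

Over a 30-day month, 99.9% allows roughly 43 minutes of downtime, 99.99% a bit over 4 minutes, and 99.999% less than half a minute, while 99.5% leaves room for more than three and a half hours.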

Conclusion

Overall, it’s a pretty close match between Scaleway and Digital Ocean, with the latter winning on availability (8 locations vs 1), but the former edging ahead on features alone, and then some more on pricing. OVHCloud simply aren’t ready yet (no node pools and no autoscaling are deal breakers) and have poor UX and tooling, while Linode is too basic for most non-test workloads (recreating clusters to upgrade Kubernetes versions is too cumbersome) while costing almost exactly the same as DO.

Of course, none of them are on par with AWS or GCP in terms of geographical locations or services, and probably never could be. Nevertheless, they’re more than sufficient for lots of workloads, hitting a sweet spot of simple, cost-effective and feature-rich (or at least having the basic building blocks for those features - thanks to Operators running pretty much anything yourself is getting easier), while still using widely available standards (Kubernetes) that allow mobility across cloud providers, if the need arises.